In the last few posts, we’ve been looking at algorithms that combine a number of simple models/distributions to form a single more complex and sophisticated model. With both neural networks and decision trees/random forests, we were interested in the classification problem: given a set of data points in different categories/classes, predict the class of a new data point. For today’s post on mixture models, we’ll focus on the modeling problem, i.e. determining a single distribution that describes the structure of the overall data set. One version of this that we looked at early on was regression, in which we tried to predict the value of one dimension/feature of a new data point based on the values of its other dimensions. A mixture model, however, is designed to produce descriptions of more complicated data sets, for which you wouldn’t necessarily expect to be able to predict values in the way that you do with regression.

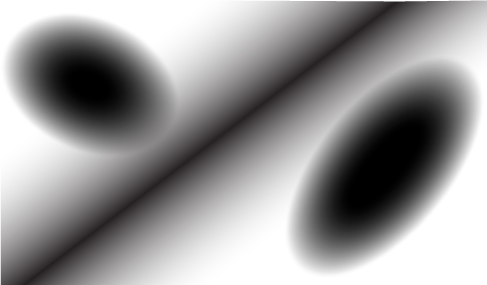

The idea behind a mixture model is that the underlying distribution for a data set may have different types of local structure in different parts of the data space, as in the Figure below. For example, if different data points are the result of different phenomena (for example, different species in a data set of animals, or different demographics of customers in a marketing data set) then we would expect each phenomenon to be described by a different model. We can get a model for the entire data set by combining the models for all the species/demographics/etc.

We’ve now seen a number of different models that we might want to combine. There’s the linear regression model, where we choose a line/plane/hyperplane and define the density/probability of a point to be the Gaussian function of the distance from the point to the line/plane hyperplane. In other words, the probability is highest right on the line/plane/hyperplane and gets closer to zero as you get farther away. Such a distribution is shown cutting diagonally across the Figure above. We later saw the logistic distribution, where we again choose a line/plane/hyperplane, but this time the density/probability gets closer to one as you get farther away on one side of the line/plane/hyperplane, but gets closer to zero as you get farther away on the other side.

A third type of model/distribution that we saw, but I didn’t describe in quite as much detail, is the Gaussian blob. The basic Gaussian blob is what you get by choosing a point in your data space and defining the densisty/probability of the distribution to be the Gaussian function of the distance from the point. So, the density is highest right at the point, then gets closer to zero as you get farther away. A Gaussian blob is similar to the regression distribution, except that it’s based on a point rather than a line/plane/hyperplane. You can then create more sophisticated Gaussian blobs by stretching this blob in different directions. The Figure above shows two Gaussian blobs, on on either side of the regression distribution. This was the distribution that was implicit in the PCA algorithm from a few posts back.

We can also take any one of these three models and apply a kernel to it. In fact, we could apply a different kernel to each individual simple distribution in a mixture model before mixing them, and/or we could apply a single kernel to the entire mixture at the end of the process. However, lets not make things too complicated for now.

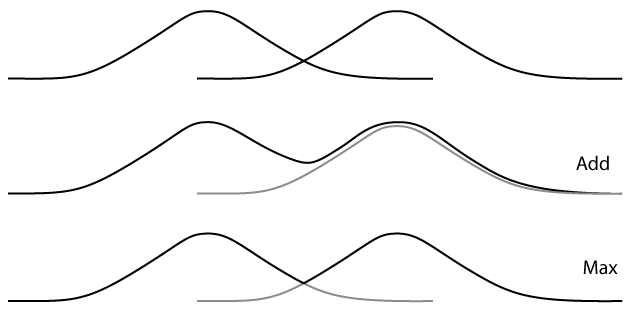

The next step is to combine these different distributions, and there are two basic ways we can do it. Recall that each simple distribution defines a probability/density value for each point in the data space. We need to use these different density values at each given point to decide what its probability/density in the mixture model should be. The first possible way is to add together the values the densities of the simple distributions. This is roughly what we would get if we printed each distribution on a sheet of clear plastic (like an old-fashioned overhead projector slide) and then stacked them all on top of each other: The overlaps between the densities add together to give us the overall density.

Another way to think about this is to think of the simple distributions as piles of sand. From this perspective, a regression distribution looks like a long, narrow ridge, something like what a gopher makes when it digs a tunnel near the surface of your lawn. A logistic function will look like the edge of a plateau – very flat on either side, but with a steep wall going up from the lower side to the higher side. A Gaussian blob will look like a single mountain peak, though its base may be an ellipse rather than a perfect circle. When we add the distributions, we pile them on top of each other like the middle picture on the Figure below, where we combine the two Gaussian distributions shown at the top of the Figure.

The second possibility is to take the maximum density of all the simple models. To picture this, we’ll again think of each simple distribution as a mountain whose height is determined by its density. To combine by the maximum method, we’ll place them all in a mountain range, as if they were holograms that can pass through each other rather than piling on each other, as in the bottom picture in the Figure above. With this method, each simple distribution will peak above the others in certain places, but will be below other distributions in other spots. The density of the mixture distribution will be the overall height of this range at any given point.

These two ways of combining distributions seem very different in how they treat the overlaps between distributions. The addition method makes more sense from a theoretical perspective: If each distribution is a separate phenomenon that is producing data points, then each simple distribution has some chance of putting a data point in any given spot, so the probability that we’ll get a new data point in that spot should be the sum of all the simple distributions. However, (as we’ll see below) the maximum method is much easier to deal with in practice because when we look at a single point, we can essentially ignore the effects of all but one of the distributions. Luckily, if the distributions that we’re combining are reasonably far from each other then their density values along the overlaps will be low enough that there often is not a huge difference between the two methods. So, it is common stick with the maximum method for ease of computation.

So, now that we know how to combine our simple distributions, we have two problems: The first is to decide how many simple distributions to combine and what types to pick. If you happen to already know something about the data set, such as a theoretical framework or some other form of domain knowledge, then this might be used to select the types of simple models. Otherwise, selecting models can be a difficult and subtle problem, similar to the problem of choosing the appropriate kernel for a data set. This problem generally falls under the category of unsupervised learning (the machine learning term) or descriptive analytics (the data mining term). In a few weeks, I’m going to switch gears and start covering unsupervised learning/descriptive analytics, but for now lets assume that we have somehow managed to choose the types of simple distributions we want to combine in our model.

The second problem is to choose the parameters for each simple distribution. Recall that the parameters for a regression or logistic distribution determine the line/plane/hyperplane that the distribution is based around. For a Gaussian blob, the parameters determine what directions the blob will be stretched in, and by how much. The set of parameters for the mixture model is the union of all these parameters for the different simple models. Moreover, if we run any of the distributions through a kernel, then that will give us even more parameters for the mixture model.

Recall that in the post on regression, we saw how we can fit a single model to a data set by trying to maximize the values of all the data points with respect to a probability density function. Specifically, we chose the parameters that give us the largest number when we multiply together the density values of all the points in the data set, giving us the distribution that is “most likely” to have produced the data set. This is what we called maximum likelihood estimation.

In practice, we often can’t calculate this value for every single possible distribution, so we’ll use what’s called gradient descent: We start with a randomly chosen distribution, then figure out what direction to nudge the parameters in to improve the probability score a little. (The gradient is what tells us how to do this.) Then we make these changes to the parameters and again figure out how to further nudge the new parameters of this new distribution, and so on. The process stops when we can no longer make small adjustments that improve the score. We saw this approach in the post on logistic regression, and it is very similar to the basic method for training neural networks.

We’ll select the parameters for a mixture model by a closely related algorithm called Expectation Maximization. We start by choosing initial parameters for each or our simple distributions. We can choose random parameters, though if we have another way of getting an initial estimate (such as domain knowledge) this will often give is a better final answer. Then we want to start adjusting these parameters. However, when we determine how to adjust the parameters, we don’t want to use all the data points for each simple distribution. From a theoretical perspective, most of the data points will have come from other simple distributions in the mixture, so each simple distribution should only care about the data points that it is likely to have produced. From a practical perspective, if we use all the data points for each distribution, we’ll probably end up with all the simple distributions having the same parameters, rather than having them nicely spread throughout the data set.

So, how do we determine which distribution was mostly likely to have produced each data point? This choice is an example of what’s called a latent variable in statistics: The value is not part of the initial data set and is not part of the final answer, but we need to choose a value in order to determine the final answer. It’s sort of like the middle layers of neurons in a neural network, playing a major behind-the-scenes role. In fact, these middle neurons were essentially how neural networks solve the equivalent problem, by having the middle neurons decide how to adjust the parameters of each earlier neuron when looking at a new data point.

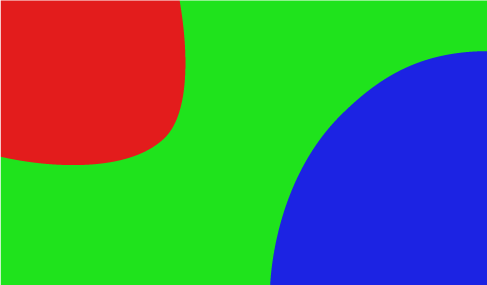

But for mixture models, we’ll use a much simpler method than what we saw with neural networks: For each point in our data set, we will calculate its density/probability in each of the simple models with our initial parameters, then assign it to the simple distribution in which it scores the highest. The Figure below shows how the we divide points in the data space between the three simple distributions in the mixture model from the beginning of the post. Points in the red and blue regions get assigned to the Gaussian blobs, while points in the green region go to the linear regression distribution. Essentially, this method treats the data points as if they were produced by combining the simple distributions using the maximum method, since it only allows each data point to be attributed to one distribution. This is the first step in the Expectation Maximization algorithm.

For the second step, after a collection of data points has been assigned to each distribution, we have each simple model pretend that this collection of data points was the entire data set, and adjust its parameters accordingly. In other words, we figure out how to make each simple distribution better fit the points that are assigned to it (i.e. with gradient descent) and make these adjustments.

Once we’ve done this for each of the simple distributions, we note that the way we divided the data set between the different simple distributions may no longer be up to date: Some of the distributions may have shifted so that a data point now gets a better probability/density value in a different simple distribution. So, we return to step one and re-divide the data points. Then we re-adjust the distributions accordingly, and so on. At some point, the way we divide the points between the simple distributions will become consistent and we will run out of ways to improve the parameters. At that point, we’ll stop and use whatever collection of parameters we have for the different simple distributions to define the overall distribution.

As with any of these algorithms, we should consider its benefits and limitations. First, note that unlike regression and classification algorithms, mixture models do not explicitly predict anything about new data points. Instead, they give a nice description of data sets with more complicated structure. In particular, because they have at their core a small number of relatively simple models/distributions, they can produce a description of a data set that could potentially be interpreted by a human. In many situations, this will be more valuable than a black box classification or regression algorithm.

There are two major technical limitations: First, the initial values that we choose for the parameters can make a big difference in the final result. Since each step in the expectation maximization procedure only makes incremental changes, it can get stuck in configurations in which there is no small change that will improve things, but there are are larger changes that would produce a much more accurate model. Think of a ball rolling down a hill that gets caught in a small divot half way down. In order to get it to roll the rest of the way down, you have to push it up slightly first. However, the Expectation Maximization algorithm has no procedure for nudging a set or parameters out of analogous divots.

The second limitation is that the quality of the final model/distribution is very dependent on the choice of the number and types of the simple distributions in the mixture. As I mentioned above, if you don’t have a good external reason to choose a particular collection of simple models, this can be very difficult to determine. This is one of the motivations for unsupervised learning/descriptive analytics, which I’ll begin discussing in two weeks, after a brief digression next week into Gaussian kernels.

Pingback: Gaussian kernels | The Shape of Data

Pingback: K-means | The Shape of Data

Pingback: Intrinsic vs. Extrinsic Structure | The Shape of Data

Pingback: The shape of data | spider's space

Pingback: Clusters and DBScan | The Shape of Data

Pingback: Modularity – Measuring cluster separation | The Shape of Data

Pingback: PageRank | The Shape of Data