In the next two posts, I plan to introduce the classification algorithm called an Artificial Neural Network (ANN). As the name suggests, this algorithm is meant to mimic the networks of neurons that make up our brains. ANNs are one of the classic tools of artificial intelligence and were initially defined and developed based entirely on the biological model. However, it was discovered early on that they also have a very simple geometric interpretation that fits nicely into the general framework I’ve outlined so far on this blog. In this week’s post, I’ll describe how a single “neuron” in an artificial neural network functions, then next week I’ll explain how they can be combined to form models with very sophisticated geometric structures.

First, we should note that in most classification algorithms, there are essentially two modes of operation: training and evaluation. In the training phase, we choose the parameters for the model/distribution, for example by finding the plane that best separates the two classes of data points in SVM or logistic regression. In the evaluation phase, we examine new (unlabeled) data points and predict which class they are in. In past posts, I didn’t define these two phases, but they were implicit in the conversation. When considering neural networks, however, it will help to be very explicit about the difference between training and evaluation. In particular, we will start by understanding how a neuron behaves in the evaluation phase, then use what we know about the evaluation phase when we examine the training phase.

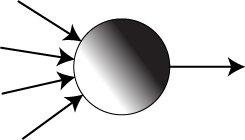

A single neuron in a neural network can be thought of as a box with a number of inputs and one output, as in the Figure on the right. It reads in the numbers from the inputs on the left, then uses them to calculate a number for the output on the right. (In a neural network, the input values may come from other neurons and the output will be attached to the inputs of other neurons, but that’s for next time.) The output value will usually be between 0 and 1, though this is not entirely necessary. If we think of the input values as coordinates of a point, then we can think of the output value as the value of a probability distribution (or rather a probability density function) at that point.

In other words, the neuron will keep track of some model/probability distribution, such as the kind that we used for regression. After we set the input values to the coordinates at a certain point, the neuron looks up the value of the distribution at that point (i.e. how dark the distribution cloud is) and outputs that value. Note that this is not exactly how ANNs were originally defined and understood. However, going from the original definition to this viewpoint requires a lot of technical details that I don’t want to go into.

Like the distributions that we saw in regression and generalized regression, the model/distribution that the neuron keeps track of will be defined by some number of parameters. In general, this distribution could be fairly complicated, but for the basic neural network, it’s a simple linear model defined by the distance to a line/plane/hyperplane. In fact, the traditional neuron model is the logistic distribution used in logistic regression, where the points on one side of the line/plane/hyperplane take values close to one, and the points on the other side take values close to zero.
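To make the evaluation phase concrete, here is a minimal sketch of a single logistic neuron in Python. The function name `neuron_output` and the particular weights and bias are my own illustrative choices, not anything fixed by the discussion above; the only assumption is that the neuron applies the logistic function to a weighted sum of its inputs, which is proportional to the signed distance from the line/plane/hyperplane.

```python
import math

def neuron_output(weights, bias, point):
    """Logistic neuron: squash the (scaled) signed distance to the hyperplane into (0, 1)."""
    # Weighted sum of the inputs; zero exactly on the decision boundary.
    z = sum(w * x for w, x in zip(weights, point)) + bias
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical parameters: the decision boundary is the line x + y = 1.
weights, bias = [1.0, 1.0], -1.0

on_boundary = neuron_output(weights, bias, [0.5, 0.5])     # on the line
far_positive = neuron_output(weights, bias, [5.0, 5.0])    # well onto one side
far_negative = neuron_output(weights, bias, [-5.0, -5.0])  # well onto the other side
```

A point on the line gets the value 1/2, while points far to either side get values close to one or close to zero.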

So we haven’t done anything new yet; we’ve just introduced new terminology for describing classification algorithms. What makes neural networks powerful is the way that they allow you to combine the models/distributions in the different neurons. But in order to combine the neurons, we need a general way to go about the training phase of the classifiers. With SVM and logistic regression, we trained the model on all the data points at once. In SVM, that meant looking at all the data points in order to find a decision boundary that is as far from the two classes as possible. For logistic regression, that meant choosing a distribution that minimized the difference between the class values of the points and the values in the distribution. Both of these training phases are specifically designed for the particular model/distribution. For neural networks, we will need a single training process that can work for any model.

There are a number of possible ways to train an artificial neural network, but I will describe one that is particularly simple and (from what I can tell) one of the earliest developed methods. For our training phase, we will only be able to ask the model two things: 1) What is the output for a given point? 2) How can we adjust the parameters of the model to most efficiently increase or decrease the output for that point?

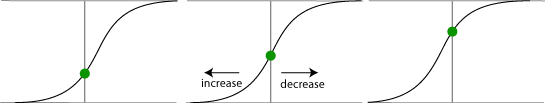

The first question is just the evaluation phase. (That’s why we discussed the evaluation phase first.) The second question is a bit tricky, but we can see how it works with the logistic function. In the accompanying Figure, we have three different logistic functions, evaluated at the same point. It may look like we’re moving the point right and left, but we’re not – it’s only moving relative to the logistic curve. If we push the logistic curve to the right (as on the left side of the Figure), the point moves down. If we push the logistic curve to the left (as on the right side of the Figure), it moves up. This has to do with the fact that the slope of the logistic curve is positive, and can all be worked out with a bit of calculus.

For higher dimensional distributions such as the higher dimensional logistic distribution, we have to use a concept from multi-variable calculus called a gradient, rather than a slope, but the idea is the same: The gradient tells us what direction to adjust the parameters of the model in order to increase or decrease the output returned by the neuron at a given point.
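For readers who want to see the calculus, here is a small sketch of the gradient for the logistic neuron. It assumes the neuron computes the logistic function of a weighted sum plus a bias; the names `output_and_gradient`, `grad_w` and `grad_b`, and the sample numbers, are hypothetical. The derivative of the logistic curve at a value `out` is `out * (1 - out)`, so by the chain rule each weight's entry in the gradient is that slope times the corresponding input coordinate.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def output_and_gradient(weights, bias, point):
    """Return the neuron's output and its gradient with respect to the parameters."""
    z = sum(w * x for w, x in zip(weights, point)) + bias
    out = sigmoid(z)
    slope = out * (1.0 - out)            # slope of the logistic curve at z (always positive)
    grad_w = [slope * x for x in point]  # how the output changes with each weight
    grad_b = slope                       # how the output changes with the bias
    return out, grad_w, grad_b

# Hypothetical parameters and data point.
weights, bias, point = [0.5, -0.25], 0.1, [2.0, 1.0]
out, grad_w, grad_b = output_and_gradient(weights, bias, point)

# Moving the parameters a small step along the gradient raises the output at this point.
step = 0.1
nudged_w = [w + step * g for w, g in zip(weights, grad_w)]
nudged_out, _, _ = output_and_gradient(nudged_w, bias + step * grad_b, point)
```

Stepping in the opposite direction (subtracting the gradient) would lower the output instead.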

To use this in the training phase of the neuron, we will assume that each data point in the training set is assigned a label of either 0 or 1 (green or blue). We will have the neuron evaluate the first data point and compare its prediction (which will be a value between 0 and 1) to the actual label. If the prediction is correct, then we move on to the next point. But if it’s incorrect (which will always be the case if we use a logistic model, since it only takes values between 0 and 1, but not exactly 0 or 1), we will want the value to be either higher or lower, so we ask the neuron how to adjust the parameters to increase or decrease the value of the model at that point, respectively. We then make these adjustments to the parameters and move on to the next data point.
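A single update of this kind might look like the following sketch. Two things here are my assumptions rather than part of the description above: the step is scaled by the error `label - out` (which amounts to gradient descent on the squared error), and the step size `rate` is an arbitrary hypothetical value. The function names are also just for illustration.

```python
import math

def predict(weights, bias, point):
    z = sum(w * x for w, x in zip(weights, point)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def update_on_point(weights, bias, point, label, rate=0.5):
    """Adjust the parameters so the output at `point` moves toward `label`."""
    out = predict(weights, bias, point)
    slope = out * (1.0 - out)  # slope of the logistic curve at this point
    error = label - out        # positive: output should rise; negative: it should fall
    # Assumption: step along the gradient, scaled by the error and the rate.
    new_weights = [w + rate * error * slope * x for w, x in zip(weights, point)]
    new_bias = bias + rate * error * slope
    return new_weights, new_bias

# Hypothetical starting parameters and one training point labeled 1.
weights, bias = [0.0, 0.0], 0.0
point, label = [1.0, 2.0], 1

before = predict(weights, bias, point)
weights, bias = update_on_point(weights, bias, point, label)
after = predict(weights, bias, point)
```

Since the label is 1 and the initial output is 1/2, the update raises the output at this point, though not all the way to 1.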

We can think about this in terms of having the data points push on the decision boundary, similar to my description of logistic regression: With the logistic distribution, the decision boundary is the line/plane/hyperplane between the points where the distribution is greater than 1/2 and the points where it’s less than 1/2. If a point labeled 1 is on the side of the decision boundary that’s greater than 1/2 then it’s on the correct side, so it pushes the line/plane/hyperplane away to increase the margin for error. But if it’s on the wrong side then it pulls the decision boundary towards itself, in the hopes of ending up on the correct side. (However, to allow for noise in the data, we won’t necessarily move the decision boundary all the way to the correct side of the data point.)

Each time we evaluate a single data point and adjust the parameters, the distribution associated to the neuron should get a little better. Once we make it through the entire data set, we can then improve it further by repeating the process a few more times. After enough runs, we will usually get to the point where, since the model cannot fit all the data points with 100% accuracy, the data points will start to push the model back and forth, and things stop getting better. So a common practice is to slow down the rate at which the neuron adjusts its parameters each time we run through the entire data set, in the hope of settling into a good compromise.
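Putting the pieces together, the whole training phase can be sketched as a loop over the data set that is repeated several times, with the step size shrinking after each pass. The data set, the number of passes (`epochs`), the starting `rate` and the `decay` factor are all hypothetical values chosen for illustration, and scaling each step by the error is again my assumption.

```python
import math

def predict(weights, bias, point):
    z = sum(w * x for w, x in zip(weights, point)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def train(data, epochs=20, rate=1.0, decay=0.9):
    """Visit the points one at a time, nudging the parameters after each one."""
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for _ in range(epochs):
        for point, label in data:
            out = predict(weights, bias, point)
            # Error times the slope of the logistic curve, scaled by the current rate.
            step = rate * (label - out) * out * (1.0 - out)
            weights = [w + step * x for w, x in zip(weights, point)]
            bias += step
        rate *= decay  # slow down after each full pass through the data
    return weights, bias

# Hypothetical toy data: label 0 in the lower left, label 1 in the upper right.
data = [
    ([-1.0, -1.0], 0), ([-2.0, -1.0], 0), ([-1.0, -2.0], 0),
    ([1.0, 1.0], 1), ([2.0, 1.0], 1), ([1.0, 2.0], 1),
]

weights, bias = train(data)
predicted = [1 if predict(weights, bias, p) > 0.5 else 0 for p, _ in data]
labels = [label for _, label in data]
```

This toy data set is cleanly separable, so the neuron ends up classifying every point correctly; with noisy data the shrinking rate is what keeps the points from pushing the boundary back and forth indefinitely.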

Note that for a single neuron, this approach to training is not as effective as other methods such as logistic regression. With logistic regression, we calculate which direction to adjust the parameters to improve the overall scores of all the data points simultaneously. This makes it possible to guarantee that the distribution we find is close to the best possible distribution. With the training process for neural networks, we don’t have such a guarantee since we only look at a single data point at a time. However, this turns out to be a better process for neural networks with multiple neurons because among the distributions that can be defined by a network, there often will not be a single best distribution. But to see what I mean by this, you’ll have to wait for the next post.

You really have a gift for exposition. What I love about these posts is that if the reader knows a bit of math they can easily fill in the technical details. As Thurston said somewhere, given some training it’s easy to go from conceptual understanding to symbolic understanding; but traditional textbooks are written to go in the opposite, more difficult direction.

Have you ever considered writing a popular book on some topics in modern math, say Seiberg-Witten theory or whatever your speciality in math used to be? I’m thinking of something along the lines of Ash and Gross’ book Fearless Symmetry, where they bravely attempt to give an account of galois cohomology. You’ll be surprised by how many people would love to read something like that (as is also evidenced by the fact that Ash and Gross have gone on to write two other books of a similar nature).
