I occasionally like to look at the ongoing Kaggle competitions to see what kind of data problems people are interested in (and the discussion boards are a good place to find out what techniques are popular.) Each competition includes a way of scoring the submissions, based on the type of problem. An interesting one that I’ve seen for a number of classification problems is the area under the Receiver Operating Characteristic (ROC) curve, sometimes shortened to the ROC score or AUC (Area Under the Curve). In this post, I want to discuss some interesting properties of this scoring system, and its relation to another similar measure – precision/recall.

Recall that in a classification problem, you’re given a training set consisting of data points with labels (we’ll call the labels A and B) and an evaluation set of data points without labels. The problem is to assign labels to the points in the evaluation set. If someone else (such as the Kaggle organizers) happens to know what the labels on the evaluation data points should be, they can calculate the accuracy of your answers by taking the number of correct labels and dividing it by the number of points in the evaluation set. So, if you got them all correct, the accuracy is 1. If you guessed at random, you would expect the accuracy to be around 0.5. (And if you get anything below 0.5, then you probably did something very very wrong.) There are some issues with this method of scoring, such as that if 90% of the evaluation points are supposed to have the same label, then you can get 90% accuracy by guessing that label for all the evaluation points. As it turns out, the ROC score fixes this issue, though there are also other reasons to use it.

Sometimes, in addition to labels for each point, your classification algorithm can also give you some measure of “confidence” in the predicted label. For example, the logistic regression algorithm technically doesn’t assign labels to data points – it assigns values from 0 to 1. We can interpret data points with low values as being likely in class A, and high values as likely in class B (assuming we set up the training algorithm that way). In particular, the lower the value, the farther it is from the decision boundary on the A side, so the more “confident” we can be in that label. Similarly, higher values are deeper into the B side of the decision boundary, so we can confidently assign label B. However, values near 0.5 are right near the decision boundary, so even a little bit of noise could have pushed them into the wrong class.

You can do something similar with random forests as well: For each point in the evaluation set, take the number of decision trees in the forest that assign label B to the point, then divide by the total number of trees. You can use this number the same way as the number from logistic regression: if most of the trees agree on the label, then the resulting low or high value tells you to have high confidence in label A or B, respectively.

Given all the scores of this form for the points in the evaluation set, you could then classify each of the evaluation points by assigning class A to all the points with value less than 0.5, and class B to all the points with value about 0.5, then calculate the accuracy. But this essentially throws away the “confidence” information. Precision/recall and the AUC/ROC score are two ways of using the confidence information in evaluating how well the points were classified.

To understand the ROC curve, imagine that instead of handing all your labels in to the Kaggle judge at the same time, you were asked to tell the judge which data points you think have label A, one at a time, starting with the ones you’re most confident in. Moreover, you have to keep going until you run out of data points, which means you even have to include on this list the points that you think should have label B. But the points that you predict label B for will go at the end: high confidence that a point has label B counts as (very) low confidence that it has label A. So if you had a value from 0 to 1 for each point, e.g. from your logistic regression or random forest algorithm, you would start with the point with the lowest score, then the next lowest scoring point and so on. (Equivalently, you could switch the roles of A and B, and start with the highest scoring points.)

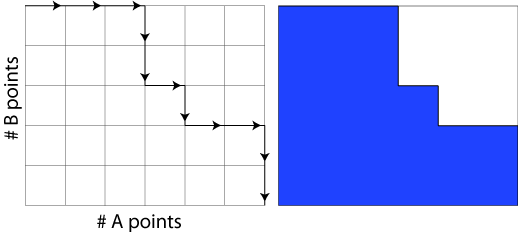

As you’re reciting this list of points, the judge (who knows the correct answers) keeps track as follows: They start by placing the tip of a pen at the top-left corner of a grid of squares. Each time you name a data point that really has label A, they move the pen to the right one square, drawing a horizontal line. Every time you name a B data point, they move the pen down one square, drawing a vertical line. The resulting path might look something like the one in the Figure below.

During this process, the total number of times they move their pen to the right is the number of evaluation data points that really have label A (let a be this number), and the number of times they move it down is the total number of points that really have label B (call this b.) So no matter what order you name the points in, their pen will go right a total of a times and down a total of b times, ending up at the opposite corner of a rectangle with dimensions a x b. The resulting curve splits this rectangle into two pieces and the “area under the curve” is the area of the lower piece, shown in blue on the right above. We want the ROC score to go from 0 to 1, just like accuracy, so the ROC score is the area of this lower piece divided by the total area of the rectangle.

But what does this number mean? Well, the best you could do would be to name all the points that actually have label A first. As you’re doing this, the judge’s pen moves across the top of the rectangle. When it gets to the upper right hand corner, you have to keep naming points, so the pen moves down the right side of the triangle as you name all the B points. In this case, the area below the curve is the entire rectangle, so the ROC score is 1. On the other hand, if you were unfortunate enough to name all the B points first, the resulting curve would follow the left and bottom sides, for a score of 0.

Now the hard question: What would we expect the ROC score to be if we named points at random? Or put more precisely, what would the average ROC score be over all the different possible orders that we could name the points in? Rather than trying to work out all the possible orders and calculating the average, there’s a symmetry argument that will give us the answer: For any given order, if we were to name the evaluation points in the exact opposite order, the resulting ROC curve would be the result of rotating the original curve by 180 degrees. This rotation swaps the above and below regions, so the sum of the areas under the two curves is equal to the area of the rectangle. The average of the two areas is therefore half the area of the rectangle and the average score is 0.5. Every possible order of the data points falls into a unique pair with average score 0.5, so the overall average of all the scores is also 0.5. (This is true no matter how many points are in classes A and B.)

In other words, the expected score for a random order is 0.5, the same as the accuracy. Note that this is the same score we would get if we replaced the ROC curve with a straight line between opposite corners. This is important for understanding how the ROC curve behaves if you have multiple points with the same confidence score. For example, lets say that you have three points that get a score of 0.2 from logistic regression, and it happens that two of these really have label A, while the third has label B.

If you were to randomly choose an order to name them in, the point with label B could come first, second or third, and each of these possibilities would lead to a different final ROC score. However, you can apply a symmetry argument similar to the one above to see that the average score between these three possibilities is the same as the score you would get by drawing a diagonal line over two squares and down one.

So in general, when there are multiple points with the same confidence value, the convention is to draw the ROC curve with a diagonal line that goes across by the number of actual A labels and down by the number of B labels in the set. So, for example, if you gave all the points in the evaluation set a score of 0.5, the ROC curve would just be a diagonal line between opposite corners of the rectangle and the ROC score would be 0.5. On the other hand, if you gave some of the points label 0 and the rest label 1, then the ROC curve would consist of two diagonal lines that meet at a point whose coordinates correspond to how many points you labeled correctly.

With a little work (that I’ll let you figure out yourself), you can show that the ROC score in this case is equal to the number you get by calculating the correctly labeled A points divided by the total number of A points, then calculating the number of correctly labeled B points divided by the total number of B points, and averaging these two numbers. This isn’t exactly the accuracy, as defined above – it’s more like a weighted accuracy that takes into account the size of each class. In fact, it’s better than accuracy because it solves the problem that I mentioned at the top of the post: If you have an evaluation set with 90% of the points labeled A, and you guess label A for all of them, while the accuracy would be 90%, this “weighted” accuracy defined by the ROC score would be 0.5, the same as random guessing since the ROC curve would be just a diagonal line.

In fact this brings up an interesting point: The ROC score when we assign all points a confidence score of 0.5, or when we assign them all 0, or for that matter if we assign them all the same number, no matter what that number is. More generally, since the ROC is determined entirely by the order of the points, it doesn’t matter if the confidence scores run from 0 to 1, or -3 to 5, or any other scale.

So, now that we understand the ROC score, what about precision and recall? It turns out these two measures are essentially a way of describing a single point on the ROC curve. Imagine if, instead of asking you to name evaluation points one at a time, the judge asked you to provide a single set of points, of any size you choose, with points that you think should have label A. This list doesn’t need to contain all the point you think have label A, but it shouldn’t contain any points that you think have label B.

The recall of the list you provide is the number of points on you list that really have label A divided by the total number of points in the evaluation set that really have label A. The precision of your list is the number of points on you list that really have label A divided by the total number of points in the set you provided. Notice that the only difference between these two values is the denominator (the value that you divide by), and both recall and precision take values between 0 and 1.

But to really understand what these values mean, we should consider how they’re related for different sets of points. You already have an order on the evaluation points defined by your confidence score, so why not play the precision/recall game once with the first point in the confidence ordering, then a second time with the first two points, then again with the first three points and so on. What happens to the precision and recall as you do this?

Well, as you get farther along the list, you will (at least occasionally) add more points that really have label A, so the numerator in both recall and precision goes up or stays the same. As the list gets bigger, the denominator in the recall stays the same, so the recall will go up each time you add another point with label A to the list and stay the same when you add a B point. On the other hand, the denominator on the precision goes up by one each time you add a point to the list. So, the precision will go up when you add an A-labeled point, and will go down whenever you accidentally add a B-labeled point.

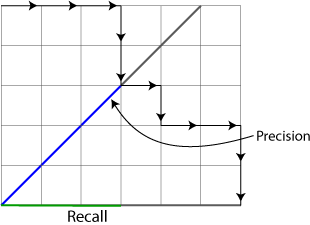

In fact, it turns out you can read the precision and recall off of the ROC curve. At each point that you stop on while drawing the ROC curve, recall is how far to the right the pen is divided by the total width of the rectangle. Precision, on the other hand, is a bit tricky to read: Given a point on the ROC line, draw a diagonal line up and to the right at a 45 degree angle until it hits the left edge and the top edge of the rectangle, as in the Figure below. (You may have to extend these edges down and to the right, respectively.) The distance from the point on the ROC curve to the bottom left endpoint of this line segment, divided by the total length of the line segment is the precision. (I’ll again leave it to the reader to work out why this is true.)

The goal of the precision/recall game is to make both precision and recall simultaneously as high as possible. However, unlike the ROC score where you get a well defined score, with precision and recall, there may not be a single pair. For example, you might get 90% precision with 20% recall, but only 70% precision with 90% recall. Which of those precision/recall pairs is better/more important depends on the application.

Excellent explanation. Finally helped me understand AUC / ROC!

Reblogged this on josephdung.

Pingback: Condensed News | Data Analytics & R